Estimating or specifying parameters in state space models¶

In this notebook we show how to fix specific values of certain parameters in statsmodels’ state space models while estimating others.

In general, state space models allow users to:

Estimate all parameters by maximum likelihood

Fix some parameters and estimate the rest

Fix all parameters (so that no parameters are estimated)

[1]:

%matplotlib inline

from importlib import reload

import numpy as np

import pandas as pd

import statsmodels.api as sm

import matplotlib.pyplot as plt

from pandas_datareader.data import DataReader

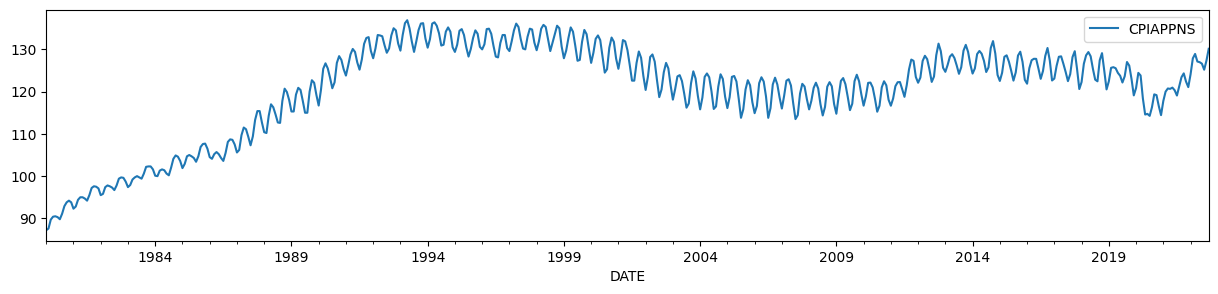

To illustrate, we will use the Consumer Price Index for Apparel, which has a time-varying level and a strong seasonal component.

[2]:

endog = DataReader('CPIAPPNS', 'fred', start='1980').asfreq('MS')

endog.plot(figsize=(15, 3));

It is well known (e.g. Harvey and Jaeger [1993]) that the HP filter output can be generated by an unobserved components model given certain restrictions on the parameters.

The unobserved components model is:

For the trend to match the output of the HP filter, the parameters must be set as follows:

where

[3]:

# Run the HP filter with lambda = 129600

hp_cycle, hp_trend = sm.tsa.filters.hpfilter(endog, lamb=129600)

# The unobserved components model above is the local linear trend, or "lltrend", specification

mod = sm.tsa.UnobservedComponents(endog, 'lltrend')

print(mod.param_names)

['sigma2.irregular', 'sigma2.level', 'sigma2.trend']

The parameters of the unobserved components model (UCM) are written as:

To satisfy the above restrictions, we will set

Since we are fixing all parameters here, we do not need to use the fit method at all, since that method is used to perform maximum likelihood estimation. Instead, we can directly run the Kalman filter and smoother at our chosen parameters using the smooth method.

[4]:

res = mod.smooth([1., 0, 1. / 129600])

print(res.summary())

Unobserved Components Results

==============================================================================

Dep. Variable: CPIAPPNS No. Observations: 513

Model: local linear trend Log Likelihood -2788.429

Date: Wed, 02 Nov 2022 AIC 5582.858

Time: 17:08:17 BIC 5595.567

Sample: 01-01-1980 HQIC 5587.840

- 09-01-2022

Covariance Type: opg

====================================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------------

sigma2.irregular 1.0000 0.009 108.686 0.000 0.982 1.018

sigma2.level 0 0.000 0 1.000 -0.000 0.000

sigma2.trend 7.716e-06 2.06e-07 37.535 0.000 7.31e-06 8.12e-06

===================================================================================

Ljung-Box (L1) (Q): 229.15 Jarque-Bera (JB): 1.27

Prob(Q): 0.00 Prob(JB): 0.53

Heteroskedasticity (H): 2.30 Skew: 0.02

Prob(H) (two-sided): 0.00 Kurtosis: 2.76

===================================================================================

Warnings:

[1] Covariance matrix calculated using the outer product of gradients (complex-step).

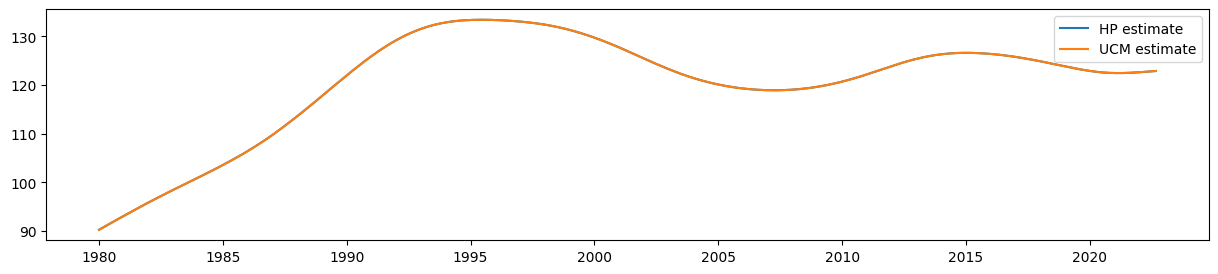

The estimate that corresponds to the HP filter’s trend estimate is given by the smoothed estimate of the level (which is

[5]:

ucm_trend = pd.Series(res.level.smoothed, index=endog.index)

It is easy to see that the estimate of the smoothed level from the UCM is equal to the output of the HP filter:

[6]:

fig, ax = plt.subplots(figsize=(15, 3))

ax.plot(hp_trend, label='HP estimate')

ax.plot(ucm_trend, label='UCM estimate')

ax.legend();

Adding a seasonal component¶

However, unobserved components models are more flexible than the HP filter. For example, the data shown above is clearly seasonal, but with time-varying seasonal effects (the seasonality is much weaker at the beginning than at the end). One of the benefits of the unobserved components framework is that we can add a stochastic seasonal component. In this case, we will estimate the variance of the seasonal component by maximum likelihood while still including the restriction on the parameters implied above so that the trend corresponds to the HP filter concept.

Adding the stochastic seasonal component adds one new parameter, sigma2.seasonal.

[7]:

# Construct a local linear trend model with a stochastic seasonal component of period 1 year

mod = sm.tsa.UnobservedComponents(endog, 'lltrend', seasonal=12, stochastic_seasonal=True)

print(mod.param_names)

['sigma2.irregular', 'sigma2.level', 'sigma2.trend', 'sigma2.seasonal']

In this case, we will continue to restrict the first three parameters as described above, but we want to estimate the value of sigma2.seasonal by maximum likelihood. Therefore, we will use the fit method along with the fix_params context manager.

The fix_params method takes a dictionary of parameters names and associated values. Within the generated context, those parameters will be used in all cases. In the case of the fit method, only the parameters that were not fixed will be estimated.

[8]:

# Here we restrict the first three parameters to specific values

with mod.fix_params({'sigma2.irregular': 1, 'sigma2.level': 0, 'sigma2.trend': 1. / 129600}):

# Now we fit any remaining parameters, which in this case

# is just `sigma2.seasonal`

res_restricted = mod.fit()

RUNNING THE L-BFGS-B CODE

* * *

Machine precision = 2.220D-16

N = 1 M = 10

At X0 0 variables are exactly at the bounds

At iterate 0 f= 3.65530D+00 |proj g|= 2.09914D-01

At iterate 5 f= 3.07793D+00 |proj g|= 8.21054D-06

* * *

Tit = total number of iterations

Tnf = total number of function evaluations

Tnint = total number of segments explored during Cauchy searches

Skip = number of BFGS updates skipped

Nact = number of active bounds at final generalized Cauchy point

Projg = norm of the final projected gradient

F = final function value

* * *

N Tit Tnf Tnint Skip Nact Projg F

1 5 11 1 0 0 8.211D-06 3.078D+00

F = 3.0779342481049738

CONVERGENCE: NORM_OF_PROJECTED_GRADIENT_<=_PGTOL

This problem is unconstrained.

Alternatively, we could have simply used the fit_constrained method, which also accepts a dictionary of constraints:

[9]:

res_restricted = mod.fit_constrained({'sigma2.irregular': 1, 'sigma2.level': 0, 'sigma2.trend': 1. / 129600})

RUNNING THE L-BFGS-B CODE

* * *

Machine precision = 2.220D-16

N = 1 M = 10

At X0 0 variables are exactly at the bounds

At iterate 0 f= 3.65530D+00 |proj g|= 2.09914D-01

This problem is unconstrained.

At iterate 5 f= 3.07793D+00 |proj g|= 8.21054D-06

* * *

Tit = total number of iterations

Tnf = total number of function evaluations

Tnint = total number of segments explored during Cauchy searches

Skip = number of BFGS updates skipped

Nact = number of active bounds at final generalized Cauchy point

Projg = norm of the final projected gradient

F = final function value

* * *

N Tit Tnf Tnint Skip Nact Projg F

1 5 11 1 0 0 8.211D-06 3.078D+00

F = 3.0779342481049738

CONVERGENCE: NORM_OF_PROJECTED_GRADIENT_<=_PGTOL

The summary output includes all parameters, but indicates that the first three were fixed (and so were not estimated).

[10]:

print(res_restricted.summary())

Unobserved Components Results

=====================================================================================

Dep. Variable: CPIAPPNS No. Observations: 513

Model: local linear trend Log Likelihood -1578.980

+ stochastic seasonal(12) AIC 3159.961

Date: Wed, 02 Nov 2022 BIC 3164.175

Time: 17:08:18 HQIC 3161.614

Sample: 01-01-1980

- 09-01-2022

Covariance Type: opg

============================================================================================

coef std err z P>|z| [0.025 0.975]

--------------------------------------------------------------------------------------------

sigma2.irregular (fixed) 1.0000 nan nan nan nan nan

sigma2.level (fixed) 0 nan nan nan nan nan

sigma2.trend (fixed) 7.716e-06 nan nan nan nan nan

sigma2.seasonal 0.0977 0.009 11.371 0.000 0.081 0.115

===================================================================================

Ljung-Box (L1) (Q): 427.41 Jarque-Bera (JB): 49.94

Prob(Q): 0.00 Prob(JB): 0.00

Heteroskedasticity (H): 2.43 Skew: 0.23

Prob(H) (two-sided): 0.00 Kurtosis: 4.48

===================================================================================

Warnings:

[1] Covariance matrix calculated using the outer product of gradients (complex-step).

For comparison, we construct the unrestricted maximum likelihood estimates (MLE). In this case, the estimate of the level will no longer correspond to the HP filter concept.

[11]:

res_unrestricted = mod.fit()

RUNNING THE L-BFGS-B CODE

* * *

Machine precision = 2.220D-16

N = 4 M = 10

At X0 0 variables are exactly at the bounds

At iterate 0 f= 3.63572D+00 |proj g|= 1.06815D-01

This problem is unconstrained.

At iterate 5 f= 2.03262D+00 |proj g|= 4.18918D-01

At iterate 10 f= 1.62777D+00 |proj g|= 1.40075D+00

At iterate 15 f= 1.53457D+00 |proj g|= 1.18624D-02

At iterate 20 f= 1.53105D+00 |proj g|= 4.43139D-02

At iterate 25 f= 1.44441D+00 |proj g|= 3.53844D-01

At iterate 30 f= 1.39156D+00 |proj g|= 2.32794D-01

At iterate 35 f= 1.38532D+00 |proj g|= 2.75610D-03

At iterate 40 f= 1.38532D+00 |proj g|= 9.53104D-06

* * *

Tit = total number of iterations

Tnf = total number of function evaluations

Tnint = total number of segments explored during Cauchy searches

Skip = number of BFGS updates skipped

Nact = number of active bounds at final generalized Cauchy point

Projg = norm of the final projected gradient

F = final function value

* * *

N Tit Tnf Tnint Skip Nact Projg F

4 40 68 1 0 0 9.531D-06 1.385D+00

F = 1.3853189469230238

CONVERGENCE: NORM_OF_PROJECTED_GRADIENT_<=_PGTOL

Finally, we can retrieve the smoothed estimates of the trend and seasonal components.

[12]:

# Construct the smoothed level estimates

unrestricted_trend = pd.Series(res_unrestricted.level.smoothed, index=endog.index)

restricted_trend = pd.Series(res_restricted.level.smoothed, index=endog.index)

# Construct the smoothed estimates of the seasonal pattern

unrestricted_seasonal = pd.Series(res_unrestricted.seasonal.smoothed, index=endog.index)

restricted_seasonal = pd.Series(res_restricted.seasonal.smoothed, index=endog.index)

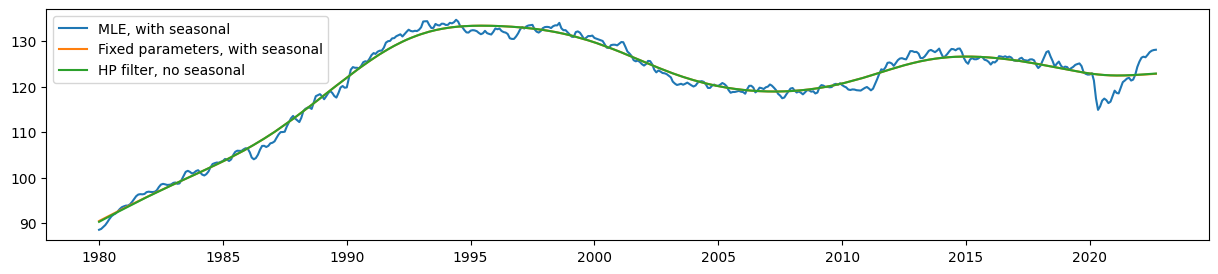

Comparing the estimated level, it is clear that the seasonal UCM with fixed parameters still produces a trend that corresponds very closely (although no longer exactly) to the HP filter output.

Meanwhile, the estimated level from the model with no parameter restrictions (the MLE model) is much less smooth than these.

[13]:

fig, ax = plt.subplots(figsize=(15, 3))

ax.plot(unrestricted_trend, label='MLE, with seasonal')

ax.plot(restricted_trend, label='Fixed parameters, with seasonal')

ax.plot(hp_trend, label='HP filter, no seasonal')

ax.legend();

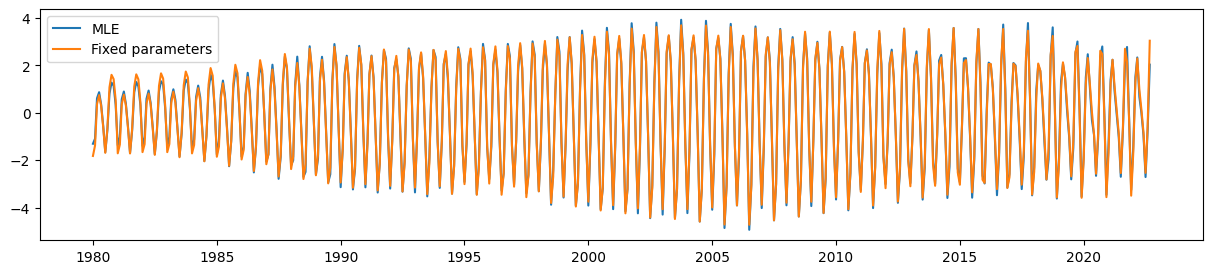

Finally, the UCM with the parameter restrictions is still able to pick up the time-varying seasonal component quite well.

[14]:

fig, ax = plt.subplots(figsize=(15, 3))

ax.plot(unrestricted_seasonal, label='MLE')

ax.plot(restricted_seasonal, label='Fixed parameters')

ax.legend();